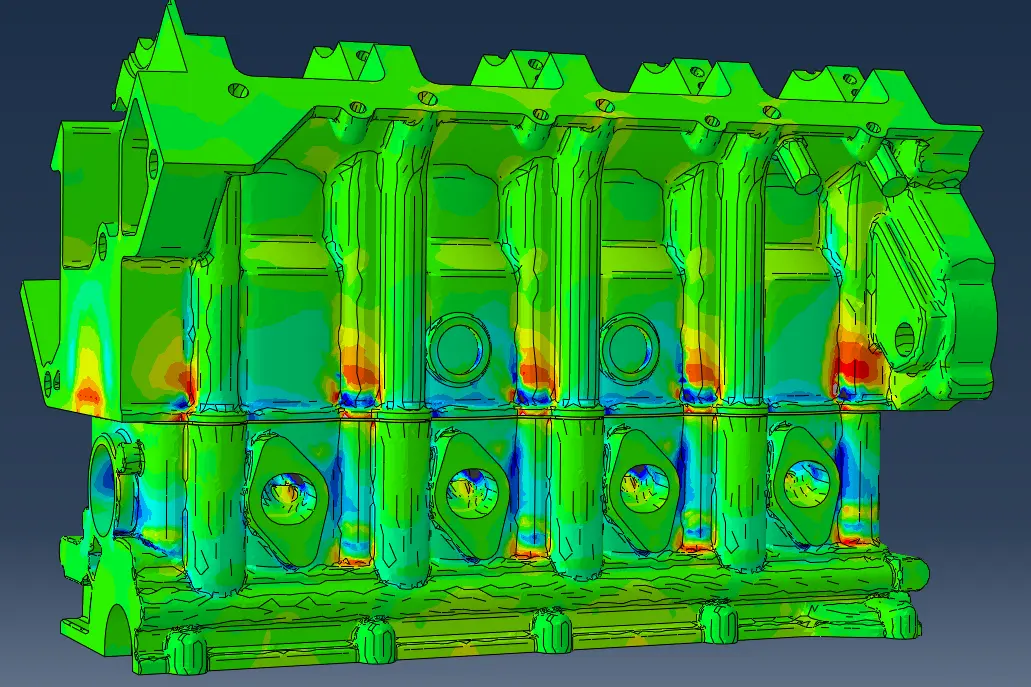

Hardware for Abaqus (and other FEA software) in 2025

After years of using workstations and laptops for Finite Element Analysis (FEA), I've seen a lot of hardware come and go. If you're like me, you've probably read the same guides and forum posts, all pointing towards a single solution for serious simulation work: an Intel Xeon-based system (or more recently, AMD EPYC systems).

However, consumer hardware marketed towards productivity (e.g. video editing) and gaming also packs a punch, and is generally more affordable.

I’m writing this to share the system I ended up with after quite a bit of research and to detail the specific configuration I now use and recommend for Abaqus, Ansys, or any other demanding FEA package. It’s a setup that has delivered better performance and value than most of the builds found online.

Rethinking the Conventional (and expensive) Wisdom

The argument for a Xeon processor, ECC memory, and enterprise storage is solid. The core counts are high, the platform is stable, and you can fit huge amounts of memory. But when you look at the raw performance data, modern desktop hardware now also comes with 16 cores and boasts high clock speeds and memory bandwidth.

In my typical workflows, models either run on a "low" number of cores (4, 8, or 16) or benefit from a huge number (somewhere between 128 and 256 cores). Personally, I don't see much use for core counts between 30 and 100, while for 100+ cores, cloud computing is the logical next step.

Obviously your licensing options play a big role here: there's no point in having cores you can't use!

My Go-To Hardware Configuration for FEA

This is the configuration that I’ve ended up with. It hits the sweet spot for performance and capacity, and it was within my budget.

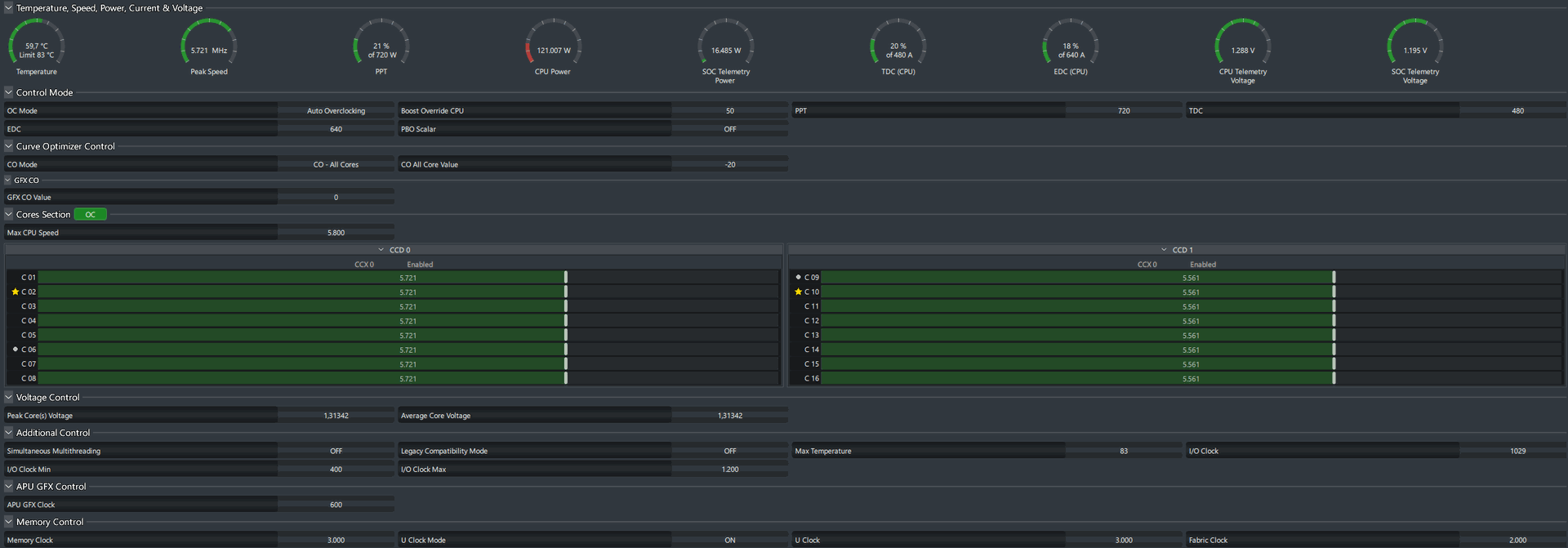

- CPU: AMD Ryzen 9 9950X (slightly overclocked and undervolted)

- RAM: 96GB (2x48GB) DDR5 6000MHz (overclocked with XMP)

- Storage: 2x 2TB PCIe 4.0 NVMe SSDs (configured in RAID 0)

- Motherboard: A quality X670E or B650E motherboard should work. I went with a more expensive X870E to (hopefully) be a bit more future-proof.

- GPU: A second-hand NVIDIA GP100, which I will likely upgrade in the future.

Here’s the breakdown of why I’ve landed on these specific parts through my own testing and use.

Ryzen 9 9950X

The CPU is the most critical decision. My current workstation is built around an AMD Ryzen 9 9950X, and the performance difference has been significant. To put it in perspective, let’s look at how it stacks up against the Xeon W5-2465X, a comparable workstation/server-class chip that is often recommended for this type of work and retails at about twice the price.

| Feature | AMD Ryzen 9 9950X | Intel Xeon W5-2465X |
| --- | --- | --- |
| Cores | 16 | 16 |
| Max Boost Clock | 5.7 GHz | 4.7 GHz |
| L3 Cache | 64 MB | 30 MB |
| TDP | 170W | 200W |
| Memory Support | DDR5 up to 5600MHz (OC to 6000 possible) | DDR5 up to 4800MHz |
| Platform Cost | Lower (AM5 motherboards) | Higher (W790 motherboards) |

My experience moving to the Ryzen 9 9950X has shown a few clear benefits in my day-to-day work:

- Higher Clock Speeds Matter: The 1.0 GHz advantage in boost clock isn't just a number on a spec sheet. I've found that run times scale roughly linearly with clock speed. The 9950X also has a fair amount of headroom for overclocking (and for undervolting to keep temperatures down).

- Cache is King: The larger 64MB L3 cache on the Ryzen chip should be beneficial for FEA solves. With that in mind, the 9950X3D (not available when I built my system) should probably be preferred, especially when you run jobs on 8 cores.

- The ECC Question: One argument for the Xeon (or EPYC or Threadripper) has been its support for Error Correcting Code (ECC) RAM. While I appreciate the peace of mind ECC provides, the practical reality is that modern DDR5 from reputable brands is incredibly stable. In all my years running long simulations on non-ECC systems, I have yet to have a crash that I could definitively attribute to a random memory bit flip. For a workstation, I've concluded that the significant performance and cost benefits of the Ryzen platform outweigh the theoretical risk that ECC mitigates. (Also, have you seen the price of ECC RAM?)

RAM: More Than Just Capacity

FEA simulations are memory hogs, but I've learned that speed is just as important as size.

I’ve found that using 6000MHz DDR5 provides a measurable improvement in solve times over the slower 4800MHz kits common in many pre-built workstations. My hypothesis is that the increased memory bandwidth allows the processor to stay fed with data, which is especially critical during the matrix-solving phase of a simulation.
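To put rough numbers on that hypothesis, here is a back-of-the-envelope sketch of theoretical peak memory bandwidth. It assumes a dual-channel desktop platform with a 64-bit (8-byte) data path per channel, and it treats the "MHz" rating on the kit as mega-transfers per second. The function name is just for illustration, and sustained real-world bandwidth will be lower than these peak figures.

```python
def peak_bandwidth_gbs(mega_transfers_per_s: int,
                       channels: int = 2,
                       bytes_per_transfer: int = 8) -> float:
    """Theoretical peak memory bandwidth in GB/s (decimal gigabytes).

    Assumptions: 2 memory channels, 8 bytes moved per transfer per channel.
    """
    return mega_transfers_per_s * 1e6 * bytes_per_transfer * channels / 1e9


fast = peak_bandwidth_gbs(6000)  # DDR5 "6000MHz" kit
slow = peak_bandwidth_gbs(4800)  # DDR5 "4800MHz" kit

print(f"DDR5-6000: {fast:.1f} GB/s")
print(f"DDR5-4800: {slow:.1f} GB/s")
print(f"Ratio:     {fast / slow:.2f}")
```

This only compares kits on the same number of memory channels. A platform with more channels changes the picture, so pass a different `channels` value if that applies to you.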

I've settled on 96GB (2x48GB) as the ideal capacity. Obviously this depends on your specific situation and models, but I've yet to run out. Keep in mind my biggest models are usually Explicit models, where RAM is not the bottleneck.

Sticking to two DIMMs instead of four leads to a more stable system, especially when running memory at these higher frequencies. Online resources show that it's difficult to get 4 sticks of RAM running at 6000MHz.

Storage: Eliminating the I/O Bottleneck

You can have the fastest CPU and RAM in the world, but if you're reading and writing from a slow drive, your entire workflow will feel sluggish. Switching to PCIe 4.0 NVMe SSDs was a no-brainer. For maximum performance, I run two drives in a RAID 0 configuration. PCIe 5.0 NVMe drives should soon become more feasible. Personally I didn't want to deal with the headache of the higher temperatures, but newer drives keep improving on that front.

SMT / Hyperthreading

Simultaneous Multithreading (SMT on AMD systems) or Hyper-Threading (on Intel systems) should be turned off. This is normally quite easy to do in your BIOS (the menu differs for every manufacturer). I've tested it, and running on 32 threads (needing 32 licenses) is, in most cases, slower than using 16 cores with SMT turned off.

You need to set very specific Abaqus configuration variables to even be able to run 32 threads, but since there's no point I won't waste time explaining how.
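If you want to confirm from within Linux that the BIOS setting took effect, a minimal sketch is shown below. It assumes a reasonably recent Linux kernel that exposes `/sys/devices/system/cpu/smt/active`. On Windows or on older kernels the function simply returns `None`. The helper name is just for illustration.

```python
import os
from pathlib import Path
from typing import Optional

# Linux-only sysfs file; assumed to exist on recent kernels.
SMT_ACTIVE = Path("/sys/devices/system/cpu/smt/active")


def smt_active(path: Path = SMT_ACTIVE) -> Optional[bool]:
    """Return True/False if the kernel reports the SMT state, None if unknown."""
    try:
        return path.read_text().strip() == "1"
    except OSError:
        # File missing or unreadable (e.g. not Linux): state cannot be determined.
        return None


state = smt_active()
labels = {True: "SMT is on", False: "SMT is off", None: "SMT state unknown"}
print(f"Logical CPUs visible to the OS: {os.cpu_count()}")
print(labels[state])
```

With SMT off on a 16-core chip, the logical CPU count reported by the OS should equal the physical core count.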

Operating system

I'm running a dual-boot setup with Windows 11 and Ubuntu 24.04. There seems to be no difference in run time between the two.

CCD0 CCD1

The Ryzen 9 9950X has two CCDs (core complex dies) with 8 cores each. CCD0 is the better-performing one and reaches higher clock speeds. When running simulations on 8 or fewer cores, it is beneficial to let them run on CCD0. To do this, set the CPU affinity of your process to cores 1-8. This is easier on Linux. On Windows there are downloadable programs that let you set the affinity and keep it across reboots.
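On Linux, the pinning can be sketched with Python's standard library as follows. This assumes SMT is off, so that logical CPUs 0-7 (the OS counts from zero) correspond to the eight cores of CCD0. That mapping is an assumption, so check it on your own machine (for example with `lscpu -e`) before relying on it. `os.sched_setaffinity` is only available on Linux, and the helper name is illustrative.

```python
import os

# Assumption: with SMT off, logical CPUs 0-7 are the eight cores of CCD0.
CCD0_CPUS = set(range(8))


def pin_to(cpus: set, pid: int = 0) -> set:
    """Restrict process `pid` (0 = this process) to `cpus`.

    Only CPUs the process is already allowed to use are requested.
    Returns the affinity set that is in effect afterwards.
    """
    allowed = os.sched_getaffinity(pid)
    target = cpus & allowed
    if not target:
        raise ValueError("none of the requested CPUs are available to this process")
    os.sched_setaffinity(pid, target)
    return os.sched_getaffinity(pid)


if hasattr(os, "sched_setaffinity"):  # Linux only
    try:
        print("Now pinned to CPUs:", sorted(pin_to(CCD0_CPUS)))
    except ValueError as exc:
        print("Affinity left unchanged:", exc)
```

Child processes started from this script inherit the affinity mask unless they change it themselves, so a solver launched afterwards (for example via `subprocess`) should stay on the same cores.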

Benchmarks

Some benchmark results can be found online. I've used the s4b model. It's not huge, but it does have 5M degrees of freedom (DOF). To get the file, run:

abaqus fetch job=s4b.inp
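To time a run from the outside, one option is a small wrapper like the sketch below. The `abaqus job=... cpus=... interactive` form is the usual command-line invocation, but treat the exact arguments as an assumption and adapt them to your installation. The helper names are illustrative. Note that this measures the whole process from launch to exit, so it may differ somewhat from the wallclock time the solver reports itself.

```python
import subprocess
import sys
import time


def abaqus_command(job: str, cpus: int) -> list:
    """Build the (assumed) command line for an interactive Abaqus run."""
    return ["abaqus", f"job={job}", f"cpus={cpus}", "interactive"]


def timed_run(cmd: list) -> float:
    """Run `cmd`, wait for it to finish, and return elapsed wall-clock seconds."""
    start = time.perf_counter()
    subprocess.run(cmd, check=True)  # raises CalledProcessError on a non-zero exit
    return time.perf_counter() - start


# Harmless demo that works without Abaqus installed:
elapsed = timed_run([sys.executable, "-c", "pass"])
print(f"Demo command took {elapsed:.3f}s")

# With Abaqus on the PATH (not executed here):
#   timed_run(abaqus_command("s4b", cpus=16))
```

On Windows the `abaqus` launcher is typically a batch file, so you may need to pass its full path or adjust how the command is started.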

In this forum 'anfreund' gives 5 results. This provider of HPC solutions also gives some results for Cascade Lake and Ice Lake, which are identical to those of the forum.

My results are the bottom two. I've also recently used a cloud service with AMD EPYC 7H12 processors, which I estimate are about 3x slower (at an equal number of cores), although I haven't run the s4b benchmark on them yet.

| Processor | Cores | Wallclock time |
| --- | --- | --- |
| 2x Intel Xeon Scalable 6226R processors (Cascade Lake Refresh) | 32 | 309s |
| 2x Intel Xeon Scalable 8380 processors (Ice Lake) | 32 | 203s |
| Intel Core i9 13900K | 8 | 318s |
| Intel Core i9 13900K + 1x K6000 | 8 + 1 GPU | 226s |
| 2x Intel Xeon E5-2687W v2 | 8 out of 16 used | 678s |
| 2x Intel Xeon E5-2687W v2 | 10 out of 16 used | 605s |
| AMD Ryzen 9 9950X | 16 | 216s |
| AMD Ryzen 9 9950X + NVIDIA GP100 | 8 + 1 GPU | 226s |

As you can see, the 16-core Ryzen almost matches the 32-core Xeon Ice Lake system (which also comes with a higher license cost!). The Intel i9 scores the same with 8 cores + 1 GPU (but the Ryzen has 16 cores to play with).
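One way to make that comparison concrete is to express each result in core-seconds (cores × wallclock time), a rough measure of how much core time each run consumed. The sketch below uses only the CPU-only numbers from the table above. The metric is just one illustrative way to look at the data, not an official Abaqus figure.

```python
# name: (cores used, wallclock seconds), copied from the CPU-only table rows
results = {
    "2x Xeon 6226R (Cascade Lake Refresh)": (32, 309),
    "2x Xeon 8380 (Ice Lake)": (32, 203),
    "Core i9 13900K": (8, 318),
    "Ryzen 9 9950X": (16, 216),
}


def core_seconds(cores: int, seconds: int) -> int:
    """Total core time consumed by a run."""
    return cores * seconds


baseline = results["Ryzen 9 9950X"][1]  # Ryzen wallclock time as the reference

for name, (cores, secs) in results.items():
    print(f"{name:38s} {cores:3d} cores {secs:4d}s "
          f"{core_seconds(cores, secs):5d} core-s "
          f"{secs / baseline:.2f}x Ryzen time")
```

Runs on fewer cores tend to look more efficient on this metric because parallel scaling is usually sub-linear, so it is most meaningful when weighed against how many cores your license lets you use.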

If anyone wants to let me know their results, contact me through e-mail or the contact form on this website.

(Especially if you happen to run a Threadripper!)

Snapshot of system details during the s4b model run